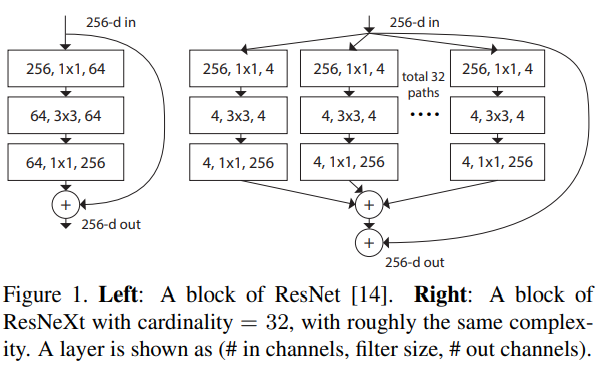

"""ResNeXt in PyTorch.

[1] Saining Xie, Ross Girshick, Piotr Dollár, Zhuowen Tu, Kaiming He.
    Aggregated Residual Transformations for Deep Neural Networks
    https://arxiv.org/abs/1611.05431
"""

import torch
import torch.nn as nn
import torch.nn.functional as F

CARDINALITY = 32  # C: number of groups in the grouped convolutions
DEPTH = 4         # channels per group in the first stage
BASEWIDTH = 64    # out_channels of the first stage; the group width scales with it


class ResNextBottleNeckC(nn.Module):
    """Bottleneck block built on grouped convolutions (form (c) in [1])."""

    def __init__(self, in_channels, out_channels, stride):
        super().__init__()

        C = CARDINALITY
        # Channels per group; doubles whenever out_channels doubles.
        D = int(DEPTH * out_channels / BASEWIDTH)

        self.split_transforms = nn.Sequential(
            # 1x1 reduction to C * D channels. Note: this conv is grouped as
            # well, whereas Fig. 3(c) of [1] groups only the 3x3 conv.
            nn.Conv2d(in_channels, C * D, kernel_size=1, groups=C, bias=False),
            nn.BatchNorm2d(C * D),
            nn.ReLU(inplace=True),
            # 3x3 grouped conv; carries the block's stride.
            nn.Conv2d(C * D, C * D, kernel_size=3, stride=stride, groups=C, padding=1, bias=False),
            nn.BatchNorm2d(C * D),
            nn.ReLU(inplace=True),
            # 1x1 expansion to the block's output width.
            nn.Conv2d(C * D, out_channels * 4, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels * 4),
        )

        # Identity shortcut unless the spatial size or channel count changes.
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != out_channels * 4:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * 4, stride=stride, kernel_size=1, bias=False),
                nn.BatchNorm2d(out_channels * 4)
            )

    def forward(self, x):
        return F.relu(self.split_transforms(x) + self.shortcut(x))


class ResNext(nn.Module):

    def __init__(self, block, num_blocks, class_names=100):
        super().__init__()
        self.in_channels = 64

        # Stem: a single 3x3 conv with stride 1 and no max pooling.
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, 3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True)
        )

        self.conv2 = self._make_layer(block, num_blocks[0], 64, 1)
        self.conv3 = self._make_layer(block, num_blocks[1], 128, 2)
        self.conv4 = self._make_layer(block, num_blocks[2], 256, 2)
        self.conv5 = self._make_layer(block, num_blocks[3], 512, 2)
        self.avg = nn.AdaptiveAvgPool2d((1, 1))
        # class_names is the number of output classes; it was previously
        # ignored in favour of a hard-coded 100.
        self.fc = nn.Linear(512 * 4, class_names)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.avg(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * 4)
        x = self.fc(x)
        return x

    def _make_layer(self, block, num_block, out_channels, stride):
        """Build one ResNeXt stage.

        Args:
            block: block type (default ResNeXt bottleneck C)
            num_block: number of blocks in this stage
            out_channels: base output channels per block (the block emits 4x this)
            stride: stride of the first block; the remaining blocks use stride 1

        Returns:
            a ResNeXt stage as an nn.Sequential
        """
        strides = [stride] + [1] * (num_block - 1)
        layers = []
        for block_stride in strides:
            layers.append(block(self.in_channels, out_channels, block_stride))
            self.in_channels = out_channels * 4

        return nn.Sequential(*layers)


def resnext50():
    """Return a ResNeXt-50 (c32x4d) network."""
    return ResNext(ResNextBottleNeckC, [3, 4, 6, 3])


def resnext101():
    """Return a ResNeXt-101 (c32x4d) network."""
    return ResNext(ResNextBottleNeckC, [3, 4, 23, 3])


def resnext152():
    """Return a ResNeXt-152 (c32x4d) network."""
    return ResNext(ResNextBottleNeckC, [3, 4, 36, 3])
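The width arithmetic in `ResNextBottleNeckC` and the stride list in `_make_layer` can be checked without PyTorch. The sketch below copies the three constants and the formula `D = int(DEPTH * out_channels / BASEWIDTH)` from the listing; the helper names `group_width`, `conv_weights`, and `stage_strides` are hypothetical and exist only for this illustration. It prints, for each stage, the grouped width `C * D`, the block output width, the weight count of the 3x3 grouped convolution, and how many times smaller that is than a dense 3x3 convolution of the same width.

```python
# Constants copied from the listing.
CARDINALITY = 32  # C: number of groups
DEPTH = 4         # channels per group in the first stage
BASEWIDTH = 64    # out_channels of the first stage


def group_width(out_channels):
    """Channels per group D, using the same formula as ResNextBottleNeckC."""
    return int(DEPTH * out_channels / BASEWIDTH)


def conv_weights(in_channels, out_channels, kernel_size, groups=1):
    """Weight count of a bias-free 2D convolution (hypothetical helper).

    Each output channel only sees in_channels / groups input channels.
    """
    return (in_channels // groups) * out_channels * kernel_size ** 2


def stage_strides(stride, num_block):
    """Per-block strides of one stage, mirroring ResNext._make_layer."""
    return [stride] + [1] * (num_block - 1)


for out_channels in (64, 128, 256, 512):
    width = CARDINALITY * group_width(out_channels)  # C * D
    grouped = conv_weights(width, width, 3, groups=CARDINALITY)
    dense = conv_weights(width, width, 3)
    print(
        f"out_channels={out_channels:4d}  C*D={width:5d}  "
        f"block_out={out_channels * 4:5d}  "
        f"grouped_3x3={grouped:7d}  dense/grouped={dense // grouped}"
    )

# Only the first block of a stage downsamples.
print(stage_strides(2, 4))
```

With `CARDINALITY = 32`, the grouped 3x3 convolution holds 32 times fewer weights than a dense one of the same width at every stage, which is what lets the block use a wider bottleneck at a comparable parameter cost.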